AI: Ethics, Risks, and Responsibility

AI is changing industries and shaping the future in ways we never thought possible. From improving healthcare to automating entire sectors, its impact is massive. But this rapid growth brings new problems we need to face. Bias in AI, privacy issues, and the question of who’s responsible when things go wrong have all become major points of debate. It’s a reminder that tech moving fast doesn’t excuse us from the responsibility that comes with it.

Bias in AI

AI learns from data, and when that data is biased, the system can make existing inequality worse. For example, hiring algorithms might favor certain groups while leaving others out, or healthcare systems might misdiagnose minorities because the data they were trained on is inaccurate or incomplete. These AI systems don’t just reflect reality; they can amplify the problems already in it.

A good example of this can be seen in Pakistan’s political landscape. Social media platforms, driven by AI, have widened the divide between people by prioritizing engagement over truth. False narratives spread, and the algorithms push more biased content instead of working to stop it. This shows why we need AI that’s fairer, more transparent, and focused on bringing people together instead of just making money.
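
To make the hiring example above a bit more concrete, here is a minimal Python sketch of one common way to check for this kind of bias: compare how often each group gets selected. The data, the group names, and the function names are all made up for illustration, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidelines. It is a rough screening signal under those assumptions, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return {group: share of candidates selected} from (group, selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical output of a hiring algorithm (illustrative numbers only).
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: ratios under 0.8 are often treated as a warning sign.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A low ratio doesn’t prove the algorithm is unfair on its own, but it is the kind of simple, transparent check that tells you where to look more closely.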

Privacy Concerns

AI thrives on data, but at what cost? The trade-off between convenience and privacy has never been clearer. Governments are using AI for surveillance in the name of “security,” which raises huge ethical questions about where to draw the line. Countries like China are leading the way in AI monitoring, but it’s a global issue.

In Pakistan, for example, weak privacy laws have let AI surveillance grow without much regulation, leaving people exposed. We need stronger rules, more awareness, and better data policies to make sure AI is being used responsibly.

Accountability and Transparency

AI’s ability to make its own decisions adds another layer of confusion when it comes to accountability. If a self-driving car crashes or an algorithm unfairly targets someone, who’s to blame? The programmer? The company? Or the AI itself? This lack of clarity could hurt people’s trust in AI, and it’s something we have to fix.

We need clear rules for accountability, and that means AI systems need to be more transparent. Developers, companies, and governments all need to work together to make sure accountability keeps pace with AI tech.

Global Efforts Toward Ethical AI

As AI keeps growing, there are a lot of efforts worldwide to fix these issues. The New York Academy of Sciences, for example, is bringing together young researchers, engineers, and policymakers to solve problems like bias and transparency. Their work on things like ethical AI in finance shows how much can be done when different fields come together.

Then there’s the European Union’s AI Act and OpenAI’s push for transparency, both part of the global effort to make sure AI is developed ethically. These global movements remind us that AI ethics is not just about tech; it’s a problem for everyone.

Conclusion

AI is powerful, and it has the ability to change the world. But it comes with serious risks. From Pakistan’s political divide to global efforts by the New York Academy of Sciences, it’s clear we all need to pay attention to how AI shapes our world. If we build fairness, accountability, and privacy into AI, we can make sure it’s used in a way that actually benefits people, rather than being tech for tech’s sake.

Similar Post You May Like

-

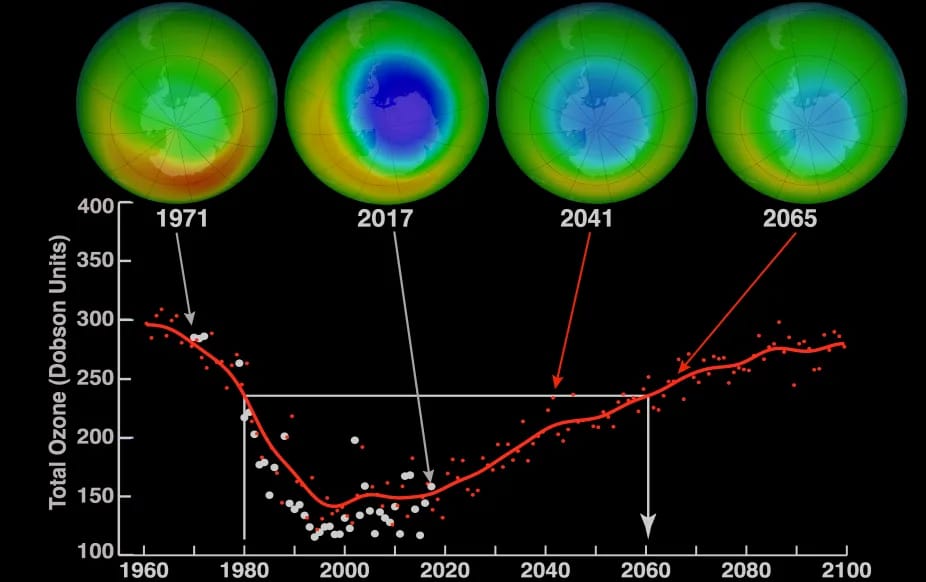

CFCs, HFCs and their long, troubled history

At its peak, the ozone hole covered an area 7 times larger than the size of Europe, around 29.9 million km2, and was rapidly expanding

-

The Origin of Universe: Deciding point where it all began!

Let us unravel and surf through the ideas throughout ages to understand what the universe and its origin itself was to its inhabitants across history.

-

The Artemis Program

Inspired by the Greek goddess of the Moon, twin sister to Apollo, the artimis program was named on 14 May 2019 by Jim Bridenstine.