LangChain: The Developer’s Toolkit for Building LLM-Powered Reality

Abstract

Building real-world applications using Large Language Models (LLMs) requires more than just clever prompting. LangChain is a framework that helps developers move from single-shot queries to intelligent systems that remember, reason, and interact with the world. This article explores LangChain’s core architecture—chaining logic, memory modules, tool integrations—and how it’s enabling applications that were once stuck in science fiction. We’ll also reflect on how LangChain is shifting the developer mindset from “prompting” to “orchestrating,” and what that means for the future of LLM-based systems.

When Prompts Aren’t Enough Anymore

Let’s be honest: working with LLMs like GPT-4 is incredible, but also… limited. Anyone who’s tried to build a chatbot or assistant quickly discovers that prompting alone won’t cut it. What happens when your app needs to remember past interactions, pull in data from a Google Sheet, translate it, and summarize it—all in one go?

That’s where LangChain comes in.

LangChain isn’t a new model or algorithm. It’s a developer-first framework that helps you connect the dots between language models, external tools, and complex workflows. You can think of it like the difference between a talented soloist (an LLM) and a full orchestra (LangChain directing how and when different instruments—tools—come in).

Under the Hood: How LangChain Works

At its core, LangChain gives you tools to manage prompts, build chains of logic, store memory, and use external services in sync with your LLM. Let’s break that down:

Prompt Templates

Forget hardcoding static strings. LangChain lets you define prompt templates that accept dynamic variables, so you can reuse and adjust prompts on the fly. That keeps your app flexible and makes prompts a lot easier to debug.

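A prompt template in Python:

```python
# Older LangChain releases expose this as: from langchain.prompts import PromptTemplate
from langchain_core.prompts import PromptTemplate

# A reusable template with one dynamic variable, {query}
prompt = PromptTemplate(
    input_variables=["query"],
    template="Explain {query} to a five-year-old.",
)

print(prompt.format(query="black holes"))  # Explain black holes to a five-year-old.
```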

Chains

Sometimes one LLM call isn’t enough. You might need to summarize a document, translate it, and then generate quiz questions. LangChain supports different chain types, like:

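- SimpleSequentialChain: Each step takes one input and produces one output, which feeds the next step.

- SequentialChain: More structured; steps can take multiple named inputs and outputs, so a later step can use any earlier result.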

- Custom Chains: Build your own sequence logic.

This chaining concept is crucial—it’s how developers move beyond the “single prompt = single response” limitation.
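To make the two chain styles concrete, here is a framework-free Python sketch of the underlying idea. It is not LangChain's API: `fake_llm` is a hypothetical stand-in for a model call that just wraps its prompt in angle brackets, so the example runs offline. `simple_sequential` mirrors the SimpleSequentialChain idea (one string passes from step to step), and `sequential` mirrors the SequentialChain idea (steps read and write named keys in shared state).

```python
from typing import Callable

# Hypothetical stand-in for an LLM call; a real app would send `prompt` to a model.
def fake_llm(prompt: str) -> str:
    return f"<{prompt}>"

# Each step is a prompt plus a model call.
def summarize(text: str) -> str:
    return fake_llm(f"summary of {text}")

def translate(text: str) -> str:
    return fake_llm(f"French translation of {text}")

def make_quiz(text: str) -> str:
    return fake_llm(f"quiz on {text}")

# SimpleSequentialChain idea: one input, one output; each output feeds the next step.
def simple_sequential(steps: list[Callable[[str], str]], text: str) -> str:
    for step in steps:
        text = step(text)
    return text

# SequentialChain idea: each step names the keys it reads and the key it writes,
# so later steps can use any earlier result, not just the previous one.
def sequential(steps: list[tuple[list[str], str, Callable[..., str]]],
               inputs: dict[str, str]) -> dict[str, str]:
    state = dict(inputs)
    for input_keys, output_key, fn in steps:
        state[output_key] = fn(*(state[k] for k in input_keys))
    return state

print(simple_sequential([summarize, translate, make_quiz], "doc"))
# <quiz on <French translation of <summary of doc>>>

result = sequential(
    [(["document"], "summary", summarize),
     (["summary"], "translation", translate),
     # This step reads two earlier keys, which a simple chain cannot do.
     (["summary", "translation"], "quiz",
      lambda s, t: fake_llm(f"quiz on {s} and {t}"))],
    {"document": "doc"},
)
print(result["quiz"])
```

The mapped version costs a little more bookkeeping, but it is what lets a quiz step see both the summary and its translation at once.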

Memory

One of the biggest complaints with LLMs? They forget everything: each API call is stateless. LangChain works around that with memory modules that feed past conversation back into the prompt:

- ConversationBufferMemory: Keeps the full chat history.

- ConversationSummaryMemory: Condenses earlier turns into a running summary, so long conversations still fit in the model's context window.

Store that memory persistently, and your bot can remember what you told it yesterday, or last week.
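The two memory styles differ mainly in what gets re-sent to the model on each turn. The sketch below is framework-free and is not LangChain's implementation: `BufferMemoryDemo`, `SummaryMemoryDemo`, and `naive_summarize` are hypothetical names, and `naive_summarize` stands in for the LLM call that would normally condense the transcript (here it just keeps what the user said, so the example runs offline).

```python
from typing import Callable

class BufferMemoryDemo:
    """Keeps every turn and replays the full transcript into each prompt."""
    def __init__(self) -> None:
        self.turns: list[str] = []

    def save(self, user: str, ai: str) -> None:
        self.turns += [f"Human: {user}", f"AI: {ai}"]

    def context(self) -> str:
        return "\n".join(self.turns)

class SummaryMemoryDemo:
    """Keeps a running summary instead of the raw transcript."""
    def __init__(self, summarize: Callable[[str, str], str]) -> None:
        self.summary = ""
        self.summarize = summarize  # would normally be an LLM call

    def save(self, user: str, ai: str) -> None:
        self.summary = self.summarize(self.summary, f"Human: {user}\nAI: {ai}")

    def context(self) -> str:
        return self.summary

def naive_summarize(summary: str, new_lines: str) -> str:
    # Placeholder for an LLM summarizer: keep only what the human said.
    human = new_lines.splitlines()[0].removeprefix("Human: ")
    return f"{summary} User said: {human}.".strip()

def build_prompt(memory: "BufferMemoryDemo | SummaryMemoryDemo", question: str) -> str:
    # The model is stateless; "memory" means re-sending context on every call.
    return f"{memory.context()}\nHuman: {question}\nAI:"

buf = BufferMemoryDemo()
buf.save("My name is Ada", "Nice to meet you, Ada!")
print(build_prompt(buf, "What's my name?"))  # the full transcript is replayed

summ = SummaryMemoryDemo(naive_summarize)
summ.save("My name is Ada", "Nice to meet you, Ada!")
summ.save("I live in Lahore", "Got it.")
print(summ.context())  # User said: My name is Ada. User said: I live in Lahore.
```

Both objects live in process memory here; remembering "last week" would additionally require saving `turns` or `summary` to a database or file between sessions.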

Agents and Tools

This is where LangChain feels most futuristic: its agent interface. Agents are LLM-powered decision-makers that choose which tools to use (a search engine, a calculator, an API, and so on) based on the input they receive. For instance:

“What’s the weather in Tokyo, and how does it compare to Lahore right now?”

Given a weather API as one of its tools, an agent might fetch current conditions for both cities, compare them, and reply with insights, all in one conversation.
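Under the hood, an agent is a loop: the model picks an action, the framework runs the chosen tool, and the result is fed back until the model decides it can answer. The sketch below shows that loop in plain Python; it is not LangChain's agent API. `stub_decide` is a hypothetical rule-based stand-in for the LLM's decision step, and the two tools are toy examples (a real agent might wrap a search engine or a weather API instead).

```python
from typing import Callable

# Toy tools: each takes a string and returns a string "observation".
def calculator(expression: str) -> str:
    # Expects "<int> <op> <int>" with op in + - *; a real tool would validate input.
    a, op, b = expression.split()
    x, y = int(a), int(b)
    return str({"+": x + y, "-": x - y, "*": x * y}[op])

def word_count(text: str) -> str:
    return str(len(text.split()))

TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "word_count": word_count,
}

def stub_decide(question: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: returns (tool_name, tool_input) or ("final", answer)."""
    if observations:  # a tool has already produced a result, so answer with it
        return "final", observations[-1]
    if any(op in question for op in "+-*"):
        return "calculator", question.rstrip("?").split(" is ", 1)[1]
    return "word_count", question.split(":", 1)[1].strip()

def run_agent(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):  # cap the loop so a confused model cannot run forever
        action, arg = stub_decide(question, observations)
        if action == "final":
            return arg
        observations.append(TOOLS[action](arg))  # execute the chosen tool
    return "Stopped: step limit reached"

print(run_agent("What is 12 * 7?"))                       # 84
print(run_agent("Count the words: the quick brown fox"))  # 4
```

In a real agent, the decision step is an LLM call that sees the tool descriptions and past observations, which is also why every extra step adds latency and why tool access needs careful security review.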

It’s Already Changing How We Build

LangChain isn’t just academic—it’s already making waves. Developers are using it to build:

- AI customer support assistants that handle follow-ups without forgetting your name.

- Medical triage tools that walk patients through symptoms step-by-step.

- Legal AI agents that parse laws and fill out documents with user input.

- Academic research assistants that summarize papers and track references.

These aren’t toy apps. They're the beginnings of practical, reliable AI systems.

Pros, Cons, and Honest Reflections

What It Gets Right:

- Modularity: You can plug and play components.

- Scalability: Add memory, tools, and logic as needed.

- Developer-first: It’s available in Python and JavaScript/TypeScript, well-documented, and rapidly evolving.

Where It Still Hurts:

- Learning Curve: For newcomers, the abstractions can feel heavy.

- Latency: More chains = more delay, especially with API calls.

- Security Risks: Tool integrations must be securely handled—especially when dealing with finance or health data.

That said, none of these issues are dealbreakers. They’re signs that the framework is growing up, fast.

LangChain vs. “Just the API”

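| Capability | OpenAI API alone | LangChain |
|---|---|---|
| Prompt response | Yes | Yes |
| Chained reasoning | Manual (your own glue code) | Built-in chains |
| Conversation memory | Manual (you resend the history) | Built-in memory modules |
| Tool usage | Function calling; you run the tools yourself | Built-in tool integrations |
| Decision-making agents | Manual | Built-in agent interface |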

LangChain is what you reach for when your idea needs more than just clever prompting.

Looking Forward

LangChain isn’t stopping here. The team behind it is launching:

- LangSmith: A debugging and testing environment for chains.

- LangServe: A way to serve your chains as APIs.

Together, these tools are transforming LangChain from a code library into a full-stack platform for AI applications.

Conclusion

We’re witnessing a shift in how developers approach AI. Prompting is just the beginning. LangChain invites us to think in terms of workflows, memory, decisions, and integrations. It doesn’t replace language models—it empowers them.

Whether you're building an intelligent assistant or an entire AI product, LangChain gives you the building blocks to go from "cool demo" to "real solution."


Similar Post You May Like

-

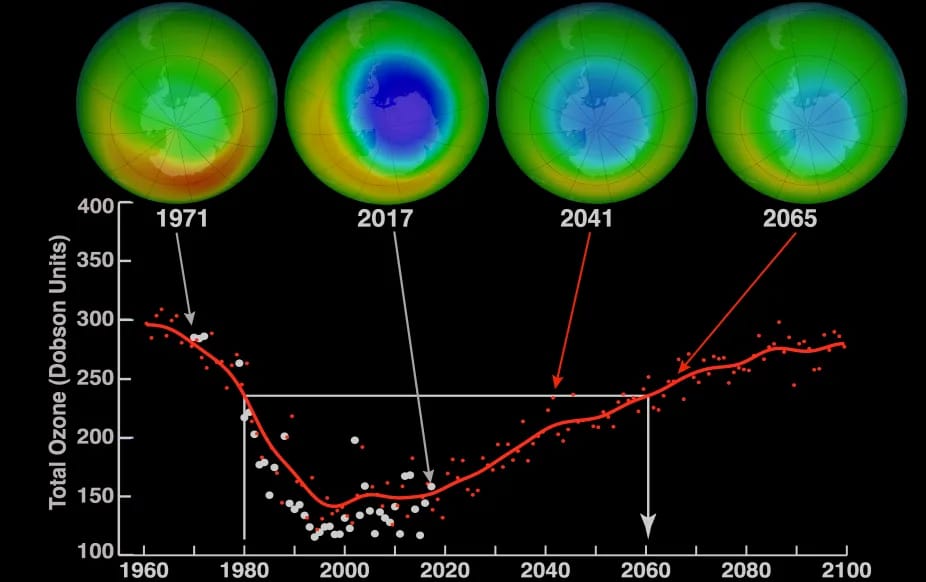

CFCs, HFCs and their long, troubled history

At its peak, the ozone hole covered an area 7 times larger than the size of Europe, around 29.9 million km2, and was rapidly expanding

-

The Origin of Universe: Deciding point where it all began!

Let us unravel and surf through the ideas throughout ages to understand what the universe and its origin itself was to its inhabitants across history.

-

The Artemis Program

Inspired by the Greek goddess of the Moon, twin sister to Apollo, the artimis program was named on 14 May 2019 by Jim Bridenstine.